Choosing the Appropriate Model:

I first attempted to apply Kirkpatrick’s Four Levels of Evaluation:

1) Reactions: Measures how participants have reacted to the training.

2) Learning: Measures what participants have learned from the training.

3) Behaviour: Measures whether what participants learned is being applied on the job.

4) Results: Measures whether the application of training is achieving results.

Although Kirkpatrick’s methodology was developed specifically to evaluate employee training programs, I presumed the same concepts could also be applied to this case study.

However, after attempting to apply Kirkpatrick’s model, I found that I did not have the information required to evaluate the ‘reactions’ and ‘learning’ levels. The case study describes the program in detail, but it does not report participants’ reactions during or after the program, nor what they learned from it.

I then attempted to apply a specifically health-oriented approach developed by the Public Health Agency of Canada. This model seems appropriate, but compared with Stufflebeam’s CIPP model, it is overly detailed for the task. Again, as with Kirkpatrick, if I had more information and perhaps access to the stakeholders themselves, it would be an excellent model to apply.

So, after considering various models, I concluded that Stufflebeam’s CIPP approach best suits the project.

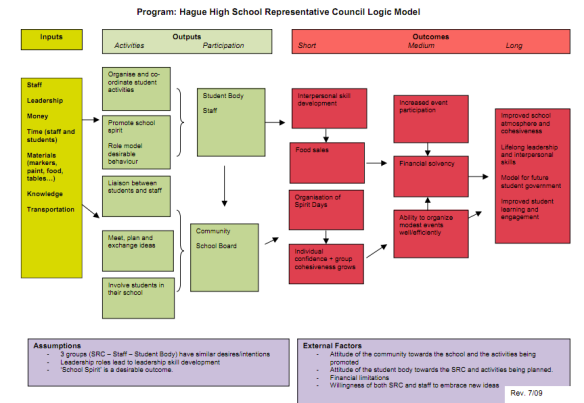

1) Context: What should we do?

2) Input: How should we do it?

3) Process: Are we doing it as planned?

4) Product: Did the program work?

Applying Stufflebeam’s CIPP Model

Context Evaluation: What should we do?

The goal of the program under evaluation is to “promote physical activity among Aboriginal women during their childbearing years”.

In order to meet this goal, a prenatal exercise program was developed for Aboriginal women in Saskatoon.

Accessible by public transportation, the program was free, was led by a certified instructor and combined “both aerobic and muscle-toning-childbirth-preparation exercises” in 45-minute sessions. Activities included “low-impact aerobics, water aerobics, selected exercise machines, line dancing…brisk walking”.

Weekly door prizes were offered, nutritious snacks were shared, and “games, crafts or parties for special occasions were held”. Resources offering free educational materials related to pregnancy and health were also available.

It seems that in each case the activities were well matched to the program goal. ‘What should we do?’ falls into four main categories:

1) Offer an attractive program that is desirable and easy to get to.

2) Create an environment that is social and builds support networks.

3) Encourage self-education for high-risk Aboriginal women.

4) Develop an effective exercise program.

Input Evaluation: How Should We Do It?

1) Offer an attractive program that is desirable and easy to get to (bus tickets, rent-free pool access, free childcare, free bathing suits, snacks and beverages).

2) Create an environment that is social and builds support networks (games, crafts, parties, a post-exercise social area, a library, bringing a buddy).

3) Encourage self-education for high-risk Aboriginal women (a registered nurse co-ordinator, a physiotherapist, a resource table, a library of books and videos for loan).

4) Develop an effective exercise program (research-based design, a certified instructor, the addition of more water-based activities).

Process Evaluation: Are We Doing it as Planned? And if Not, Why Not?

Based on the inputs listed under the previous question, it seems that the National Health Research and Development Program has done an excellent job. Particularly impressive is the initial recruitment of 7% of the target population.

All activities seem ‘value-added’ and their link to the program goal is easily identifiable. The plan seems to go above and beyond the initial requirements.

Product Evaluation: Did it Work?

The program seems to be successful, particularly as participants began to ‘bring a buddy’ and asked for more water aerobics classes.

The breadth of the program is impressive; however, a loaded question like ‘did it work?’ involves many factors. For example, it would be useful to see the program budget and evaluate the program’s cost-effectiveness.

It would also be interesting to know the value gained by all stakeholders (from participants to the YMCA) through partnerships and participation developed beyond the confines of this program. For example, did participants begin using other YMCA programs? Did the nutritious snacks supplied at the sessions influence participants’ dietary habits at home?

Reflection

The Stufflebeam model is straightforward and usable. It is open-ended and, judging from the plethora of information available online, can be adapted to a variety of programs through the creation of specific sub-questions.

The brilliance of this four-step model seems to lie in its simplicity.